NeuroScaler

Enable neural-enhanced video streaming at scale

Summary

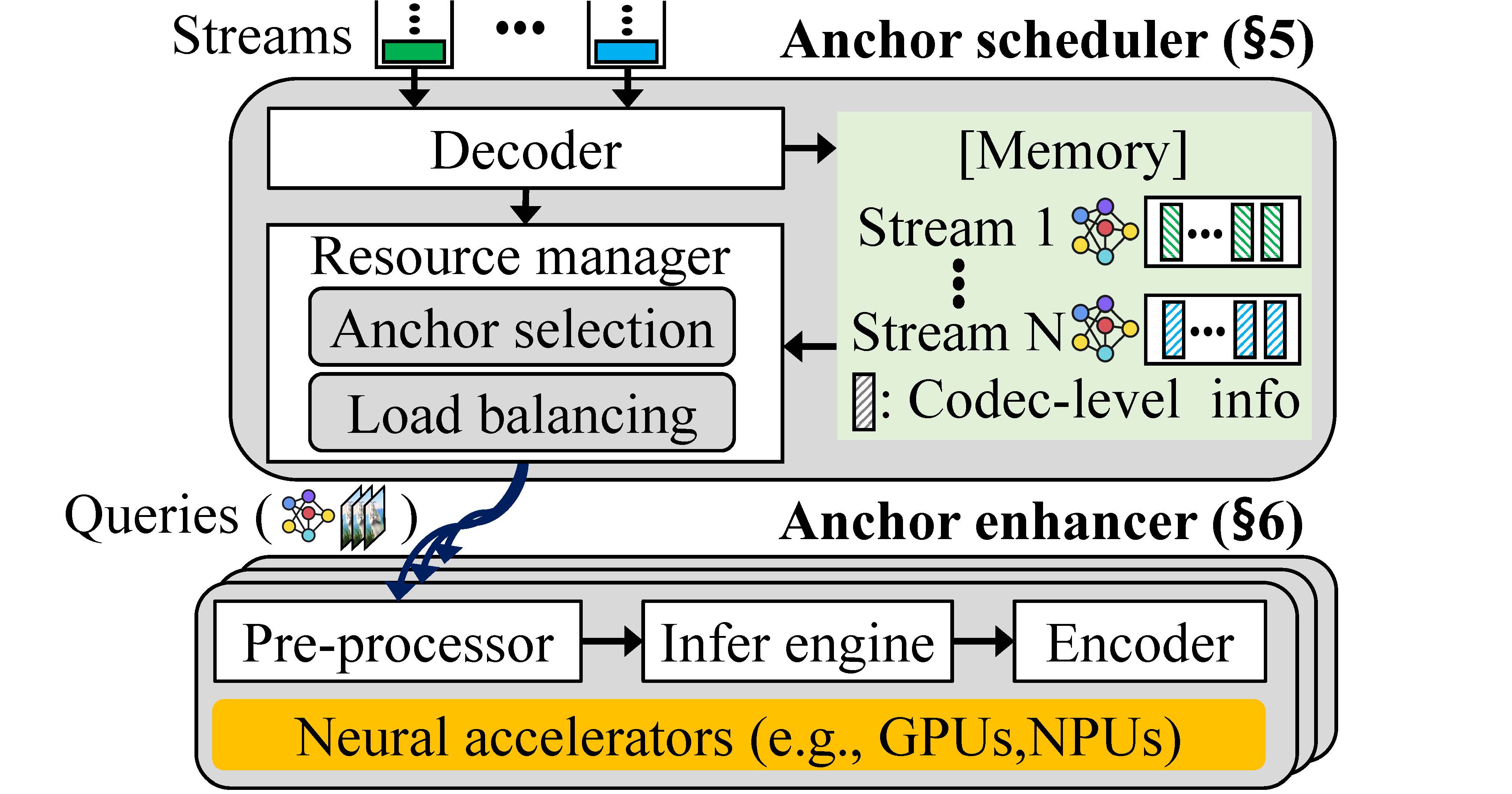

High-definition live streaming has experienced tremendous growth. However, the quality of live video is often limited by the streamer’s uplink bandwidth. Recently, neural-enhanced live streaming has shown great promise in improving video quality by running neural super-resolution at the ingest server. Despite this benefit, neural enhancement is too expensive to deploy at scale. To overcome this limitation, we present NeuroScaler, a framework that delivers efficient and scalable neural enhancement for live streams. First, to accelerate end-to-end neural enhancement, we propose novel algorithms that significantly reduce the overhead of video super-resolution, encoding, and GPU context switching. Second, to maximize the overall quality gain, we devise a resource scheduler that considers the unique characteristics of the neural-enhancing workload. Our evaluation on a public cloud shows that NeuroScaler reduces the overall cost by 22.3× compared to the latest per-frame neural-enhancing system and by 3.0-11.1× compared to the latest selective neural-enhancing system.